While everyone waits day after day for Gemini 3, Chinese vendors keep shipping at an intense pace.

AI coding has gone from red ocean to dead ocean.

ByteDance still came in with doubao-seed-code, a model specialized for code tasks.

My guess is that this is tied to Trae recently losing access to Claude.

In the end, a vendor is best off relying on its own models.

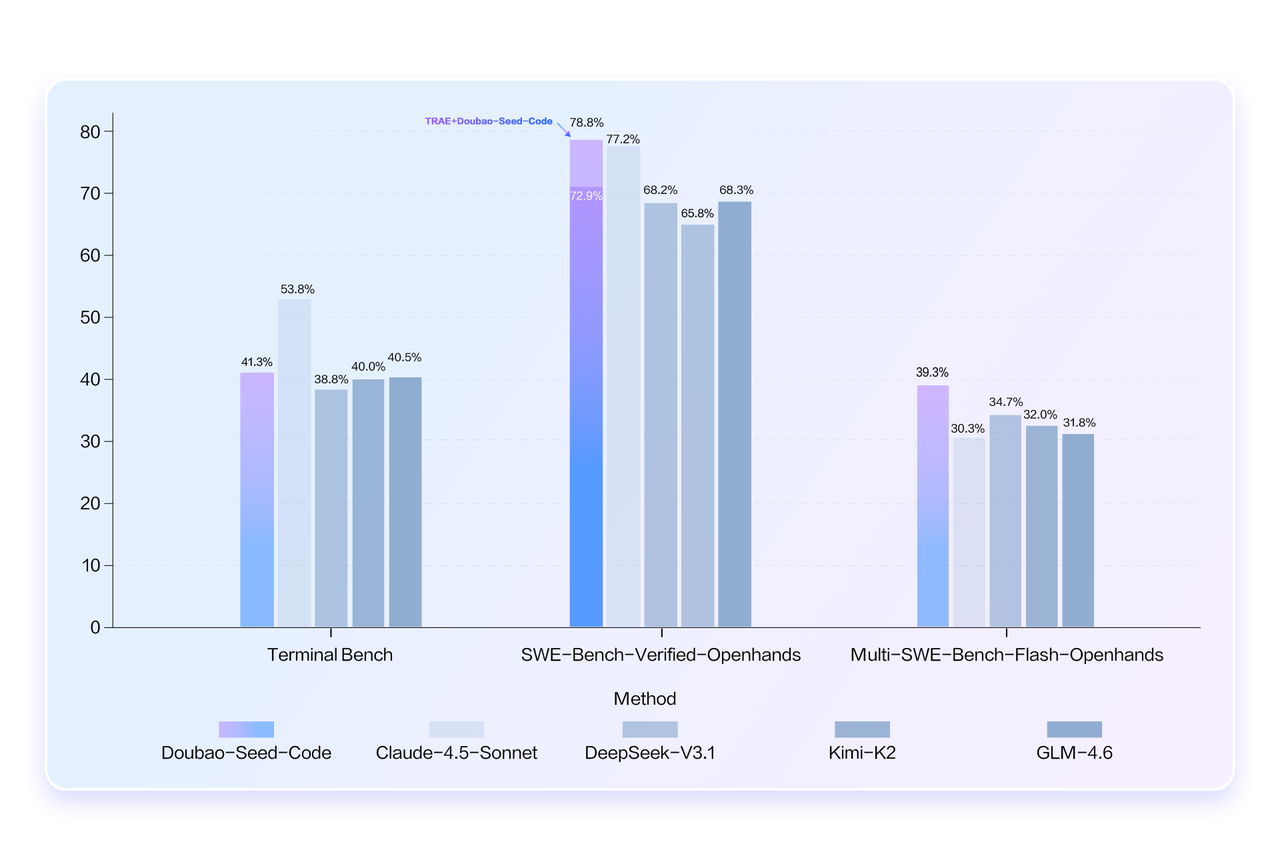

Based on the official benchmark:

Trae plus doubao-seed-code scored 78.8%, beating Claude-4.5-Sonnet at 77.2%.

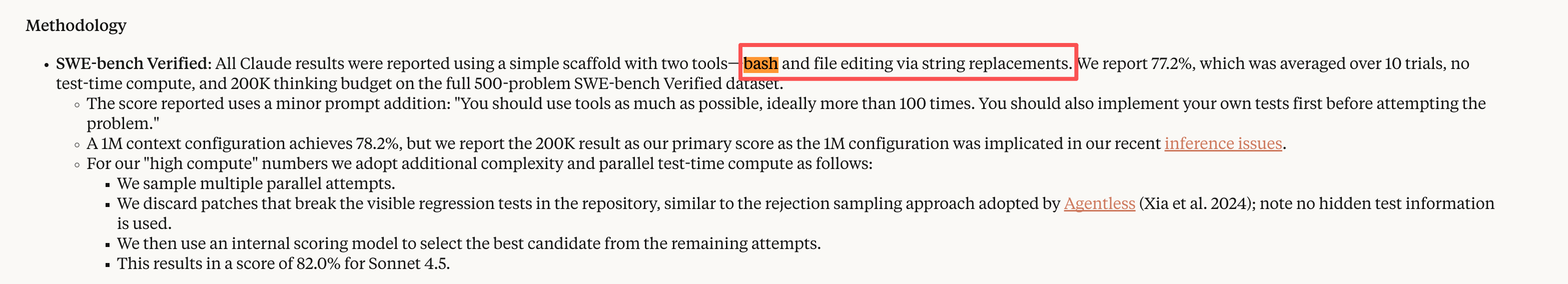

But there is a catch. The official score is reported under SWE-Bench-Verified-Openhands. From what I found, including the Anthropic docs, Claude-4.5-Sonnet got its 77.2% on SWE-Bench-Verified using only bash and simple string replacements.

If I am wrong here, please correct me in the comments.

The tasks are the same, but the tool setup is different. This difference matters for agents. SWE-Bench-Verified-Openhands uses the OpenHands toolkit.

So I would treat this benchmark cautiously and trust hands-on use instead.
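To put the gap in perspective, here is a rough back-of-the-envelope calculation. It assumes both scores cover the full 500-task SWE-Bench Verified set; the helper name `solved` is mine, not from any benchmark tooling.

```python
# SWE-Bench Verified has 500 tasks; assuming both runs used all of them.
TOTAL_TASKS = 500


def solved(score_percent: float, total: int = TOTAL_TASKS) -> int:
    """Convert a percentage score into an approximate number of solved tasks."""
    return round(score_percent / 100 * total)


doubao = solved(78.8)  # Trae + doubao-seed-code
claude = solved(77.2)  # Claude-4.5-Sonnet
gap = doubao - claude

print(f"doubao-seed-code: {doubao} tasks, Claude-4.5-Sonnet: {claude} tasks, gap: {gap}")
```

Under that assumption the 1.6 point lead is a difference of about 8 tasks, which a different tool setup could plausibly account for.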

I tried it myself to see if it really beats other domestic (Chinese) models.

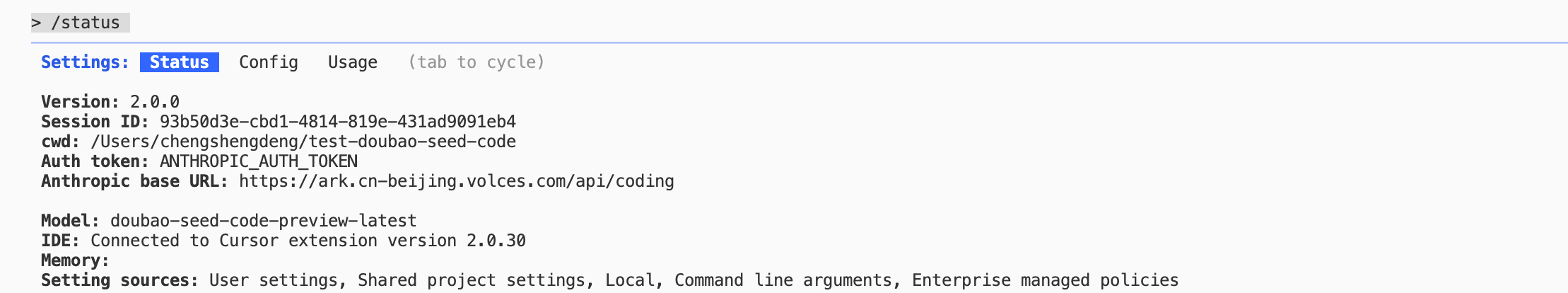

First, it also supports Claude Code integration. Here is how:

- Open the Claude Code config file in the terminal:

```
vim ~/.claude/settings.json
```

- Replace the content with the following, putting your own API key in place of ARK_API_KEY:

```json
{
  "env": {
    "ANTHROPIC_AUTH_TOKEN": "ARK_API_KEY",
    "ANTHROPIC_BASE_URL": "https://ark.cn-beijing.volces.com/api/coding",
    "API_TIMEOUT_MS": "3000000",
    "CLAUDE_CODE_DISABLE_NONESSENTIAL_TRAFFIC": 1,
    "ANTHROPIC_MODEL": "doubao-seed-code-preview-latest"
  }
}
```

Then verify:

If the model shows doubao-seed-code-preview-latest, the setup is done.
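If the model name does not show up, a quick check of the file can rule out typos. The sketch below is my own helper, not part of Claude Code. It assumes the three keys listed in `EXPECTED_KEYS` are the ones that matter and that a literal `ARK_API_KEY` value means the placeholder was never replaced.

```python
import json
from pathlib import Path

# Keys taken from the config above; treating these three as required is an assumption.
EXPECTED_KEYS = ("ANTHROPIC_AUTH_TOKEN", "ANTHROPIC_BASE_URL", "ANTHROPIC_MODEL")
# Assumption: this literal value means the real key was never filled in.
PLACEHOLDER_TOKEN = "ARK_API_KEY"


def check_settings(text: str) -> list[str]:
    """Return a list of human-readable problems found in a settings.json string."""
    try:
        data = json.loads(text)
    except json.JSONDecodeError as exc:
        return [f"invalid JSON: {exc.msg} (line {exc.lineno})"]

    env = data.get("env") if isinstance(data, dict) else None
    if not isinstance(env, dict):
        return ['missing top-level "env" object']

    problems = [f"missing env key: {key}" for key in EXPECTED_KEYS if key not in env]
    if env.get("ANTHROPIC_AUTH_TOKEN") == PLACEHOLDER_TOKEN:
        problems.append("ANTHROPIC_AUTH_TOKEN is still the placeholder")
    return problems


if __name__ == "__main__":
    path = Path.home() / ".claude" / "settings.json"
    if not path.exists():
        print(f"{path} not found")
    else:
        issues = check_settings(path.read_text(encoding="utf-8"))
        print("looks fine" if not issues else "\n".join(issues))
```

An empty result only means the file is well formed; it does not confirm that the key or endpoint actually works.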

Now for a standard test I use a lot:

Design and build a highly creative, detailed voxel art scene of daytime Shanghai architecture. Make it stunning and diverse with rich colors. Use HTML, CSS, and JS, and output everything in a single HTML file that can be opened directly in Chrome.

Here is doubao-seed-code:

It generated an upside-down skyline.

I asked it to fix it.

This is better, but it does not really look like Shanghai.

I ran the same case on Claude-4.5-Sonnet.

Claude is clearly better.

Next, a common ball physics test:

Use p5.js with no HTML to create 10 colored balls bouncing inside a rotating hexagon, with gravity, elasticity, friction, and collisions.

Not great.

Compare with Claude-4.5-Sonnet:

The gap is still large.

Another ball test in Python:

Write a Python program using Pygame or another suitable library to simulate several balls under gravity bouncing realistically inside a square rotating around its center. The balls should respond to collisions with the rotating walls, with realistic physics including velocity changes, gravity, and rotation aware collision detection.

It still does not seem to understand motion well.
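For context, this is roughly what "rotation aware collision" asks for. The sketch is mine, not output from either model, and it makes simplifying assumptions: a frictionless wall of effectively infinite mass, math-style coordinates (y up) with the center of rotation at the origin, and counter-clockwise rotation counted as positive. The function name and parameters are hypothetical.

```python
def bounce_off_rotating_wall(velocity, contact, normal, omega, restitution=1.0):
    """Reflect a ball's velocity off a wall that rotates about the origin.

    velocity:    (vx, vy) of the ball before impact
    contact:     (px, py) contact point, relative to the rotation center
    normal:      (nx, ny) unit normal of the wall, pointing into the container
    omega:       angular velocity in rad/s, counter-clockwise positive
    restitution: 1.0 is perfectly elastic, 0.0 is perfectly inelastic
    """
    vx, vy = velocity
    px, py = contact
    nx, ny = normal

    # The wall itself is moving: at the contact point its velocity is omega x r.
    wall_vx, wall_vy = -omega * py, omega * px

    # Work in the wall's frame, where the wall is momentarily at rest.
    rel_vx, rel_vy = vx - wall_vx, vy - wall_vy
    approach = rel_vx * nx + rel_vy * ny
    if approach >= 0:
        # Ball is already separating from the wall, so leave it alone.
        return velocity

    # Flip the normal component of the relative velocity, scaled by restitution.
    impulse = (1.0 + restitution) * approach
    rel_vx -= impulse * nx
    rel_vy -= impulse * ny

    # Transform back to the world frame.
    return (rel_vx + wall_vx, rel_vy + wall_vy)


# A ball at rest gets pushed inward by a wall that is sweeping toward it.
print(bounce_off_rotating_wall((0.0, 0.0), (1.0, 0.5), (-1.0, 0.0), omega=1.0))
```

The key step is subtracting the wall's own velocity before reflecting. A simulation that skips it treats the rotating walls as static, so balls never pick up energy from the rotation.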

One last case:

Write an HTML file using three.js to show how Earth formed in 3D based on scientific understanding. No user provided images required.

Compare with GLM-4.6:

Video 1

The two look similar. Both are acceptable.

One advantage of doubao-seed-code is that it supports image understanding natively, unlike many domestic models that are not multimodal.

Since it can read images, I tried UI replication.

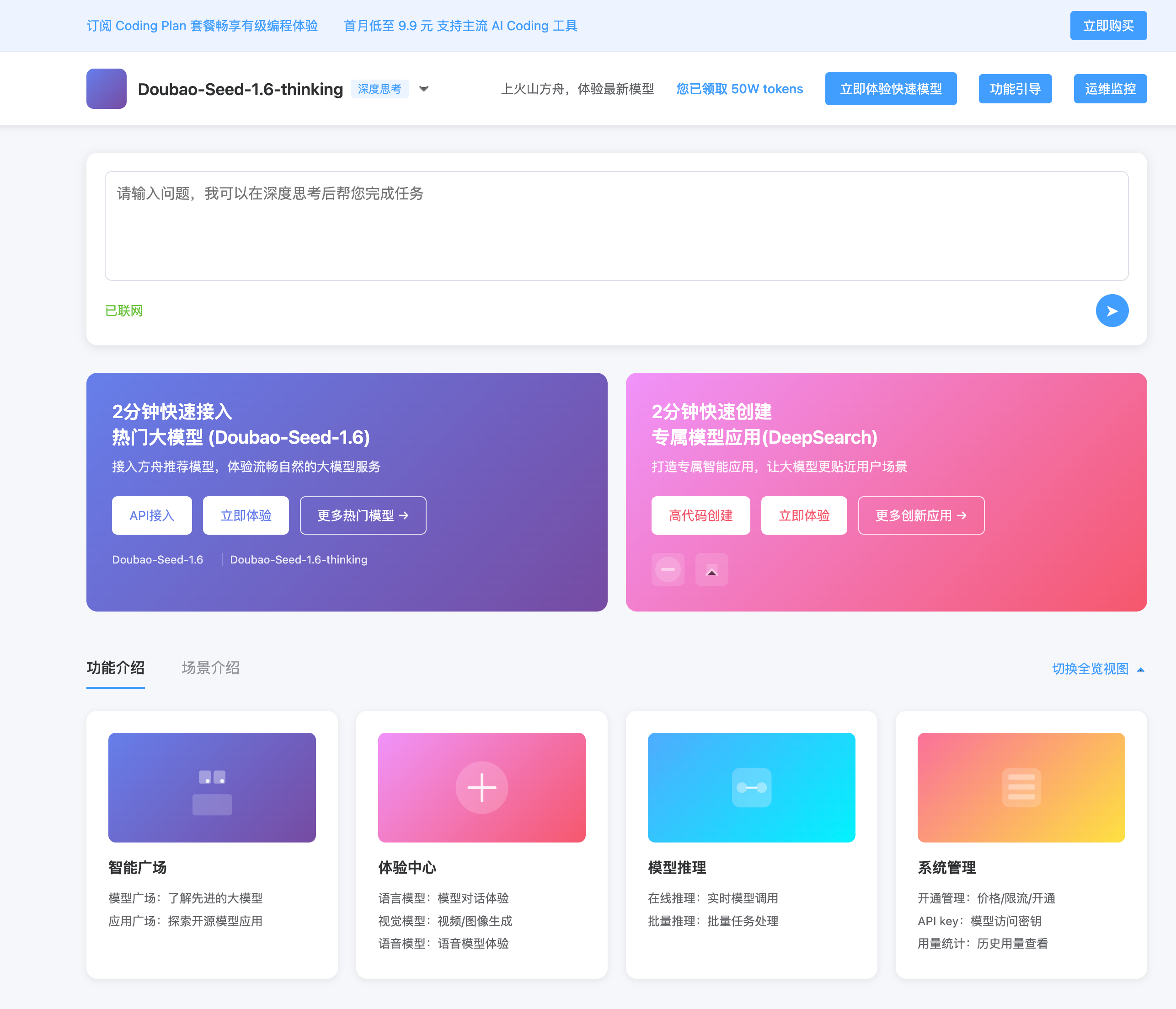

First, I asked it to replicate a Volcano Engine page:

Use HTML, CSS, and JS to recreate this page. You can use SVG to approximate images.

The layout is accurate, but the colors still need work.

I also tested Claude-4.5-Sonnet.

Claude is closer on colors, but doubao-seed-code is very accurate on content and on small details like icons and toggle buttons. In that respect it may actually be the better of the two.